He didn’t explicitly say it, but the implication of Paresh Kharya’s statements was clear. Someday, potentially very soon, AI models are going to get so large that today’s go-to AI hardware architecture, which combines x86 CPUs with a stable of GPU accelerators, will have to be rethought from the ground up. Kharya, Nvidia’s senior director of product management and marketing, spoke to reporters in a video conference last week, previewing the announcements the company is making at this week’s virtual Nvidia GTC event, which kicked off Monday.

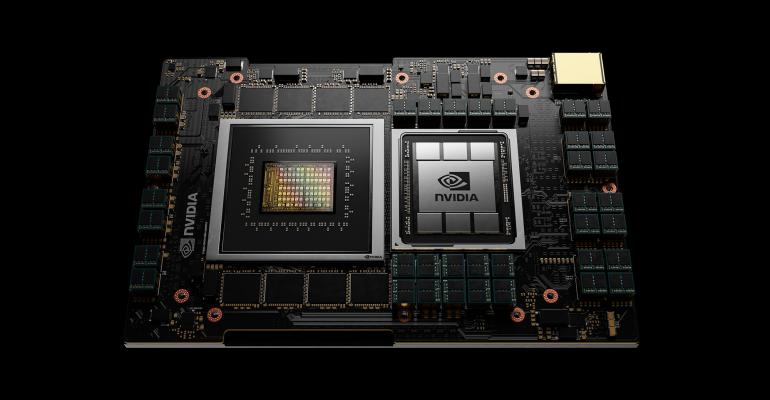

Going with that thesis, the company has given itself a head start on the rethinking. On Monday it announced Grace, an upcoming Nvidia data center CPU that is the result of a combined “10,000 engineering years of work,” according to a company statement. Based on a future Arm Neoverse processor architecture that has yet to be revealed, it is being designed for extremely tight coupling with Nvidia GPUs, removing any “bottlenecks” that exist in the interconnection between today’s x86 CPUs and the accelerators, Kharya said.

Nvidia agreed to acquire Arm for $40 billion last year, but the deal is going through a thorny international regulatory approval process, and a successful close appears far from certain.

The Nvidia Grace CPU is meant to address “the growing size and complexity of AI models,” Kharya said. In just the last two years, models went from hundreds of millions of parameters to 175 billion parameters. Google Brain researchers claimed in a paper earlier this year that they managed to train a 1.6-trillion-parameter model using Google’s internally developed TPU processors.
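To put those parameter counts in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. It assumes 2 bytes per parameter (half-precision storage), which is an assumption for illustration and not a figure from Nvidia or Google; the 300-million entry is likewise an illustrative stand-in for “hundreds of millions,” and the function name `weights_size_gb` is hypothetical.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumption (not from the article): each parameter is stored in 2 bytes (FP16).
BYTES_PER_PARAM = 2


def weights_size_gb(num_params: int, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Return the size of the weights in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Parameter counts mentioned in the article, plus one illustrative value
# standing in for "hundreds of millions".
models = {
    "300 million (illustrative)": 300_000_000,
    "175 billion": 175_000_000_000,
    "1 trillion": 1_000_000_000_000,
    "1.6 trillion": 1_600_000_000_000,
}

for name, params in models.items():
    print(f"{name}: {weights_size_gb(params):,.1f} GB of weights")
```

Under that assumption, a trillion-parameter model needs on the order of terabytes for its weights before counting optimizer state or activations, far more than the tens of gigabytes of memory on a single GPU accelerator. That gap is why the speed of the link between CPU memory and GPU memory matters so much.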

With Grace, Nvidia, which has never produced a data center CPU, is eyeing the day when trillion-parameter models become commonplace. That’s when the current state-of-the-art architecture just won’t cut it, Kharya said. A system powered by Grace will train a 1-trillion-parameter NLP model 10 times faster than today’s fastest Nvidia DGX computers, which are designed for AI and run on x86 CPUs, the company said.

The designers’ focus was on increasing the number of GPUs a single CPU can work with and improving CPU access to GPU memory, Kharya said. The design essentially enables the CPU to treat GPU memory as its own, he said.

Enabling that fast CPU-to-GPU link is Nvidia’s NVLink interconnect technology, an alternative to the PCIe links used in x86-based architectures, which the company says provides a 900GB/s connection between the two processors. Another key technology in Grace is an LPDDR5x memory subsystem, which according to Nvidia delivers twice the bandwidth of DDR4 memory and 10 times its energy efficiency.

Nvidia is expecting to make the first Grace chips available in early 2023. Two supercomputers running on Grace CPUs and Nvidia GPUs are expected to come online that year as well. One will be at the Swiss National Supercomputing Centre and the other at the US Department of Energy’s Los Alamos National Laboratory. Both systems will be built by Hewlett Packard Enterprise, Nvidia said.